Creating a first person shooter with one million players

I'm Glenn Fiedler and welcome to Más Bandwidth, my new blog at the intersection of game network programming and scalable backend engineering.

Back in high school I played id Software games from Wolfenstein to DOOM and Quake. Quake was truly a revelation. I still remember Q1Test, where you could hide under a bridge in the level and hear the footsteps of a player walking above you.

That was 1996. Today it's 2024. My reflexes are much slower, I have slightly less hair, and to be honest, I really don't play first person shooters anymore – but I'm 25 years into my game development career and now I'm one of the world's top experts in game netcode. I've even been fortunate enough to work on several top tier first person shooters: Titanfall and Titanfall 2, and some of my code is still active in Apex Legends.

Taking a look at first person shooters in 2024, there seem to be two main genres: battle royale games like PUBG, Fortnite and Apex Legends (60-100 players), and team combat games like Counter-Strike (5v5), Overwatch (6v6) and Valorant (5v5).

Notably absent are the first person shooters with thousands of players promised by Improbable back in 2014:

"Imagine playing a first-person shooter like Call of Duty alongside thousands of other players from across the world without having to worry about latency. Or a game where your actions can trigger persistent reactions in the universe that will affect all other players. This is what gaming startup Improbable hopes to achieve."

- Wired (https://www.wired.com/story/improbable/)

What's going on? Is it really not possible, or was Improbable's technology just a bunch of hot air? In this article we're going to find out if it's possible to make a first person shooter with thousands of players – and we're going to do this by pushing things to the absolute limit.

One Million Players

"Would someone tell me how this happened? We were the fucking vanguard of shaving in this country. The Gillette Mach3 was the razor to own. Then the other guy came out with a three-blade razor. Were we scared? Hell, no. Because we hit back with a little thing called the Mach3Turbo. That's three blades and an aloe strip. For moisture. But you know what happened next? Shut up, I'm telling you what happened – the bastards went to four blades. Now we're standing around with our cocks in our hands, selling three blades and a strip. Moisture or no, suddenly we're the chumps. Well, fuck it. We're going to five blades."

- The Onion

When scaling backend systems, a great strategy is to aim for something much higher than you really need. For example, my startup Network Next makes a network acceleration product for multiplayer games, and although it's incredibly rare for any game to have more than a few million players at the same time, we load test Network Next up to 25M CCU.

Now when a game launches using our tech and hits a few hundred thousand or a million players, it's all very simple and everything just works. There's absolutely no fear that some issue will show up due to scale because we've already pushed it much, much further than it will ever be used in production.

Let's apply the same approach to first person shooters. We'll aim for 1M players and see where we land. After we scale to 1M players, I'm sure that building a first person shooter with thousands of players will seem really easy.

Player Simulation at Scale

The first thing we need is a way to perform player simulation at scale. In this context simulation means the game code that takes player inputs and moves the player around the world, collides with world geometry, and eventually also lets the player aim and shoot weapons.

Looking at both CPU usage and bandwidth usage, it's clearly not possible to have one million players on one server. So let's create a new type of server, a player server.

Each player server handles player input processing and simulation for n players. We don't know what n is yet, but this way we can scale horizontally to reach the 1M player mark by having 1M / n player servers.

The assumptions necessary to make this work are:

- The world is static

- Each player server has a static representation of the world that allows for collision detection to be performed between the player and the world

- Players do not physically collide with each other

Player servers take the player input and delta time (dt) for each client frame and step the player state forward in time. Players are simulated forward only when input packets arrive from the client that owns the player, and these packets correspond to actual display frames on the client machine. There is no global tick on a player server. This is similar to how most first person shooters in the Quake netcode model work, for example Counter-Strike, Titanfall and Apex Legends.
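A minimal sketch of that per-input stepping, written in Go since the player server design later leans on goroutines. The `Input` and `PlayerState` types, the `moveSpeed` constant and the trivial movement model are hypothetical, and collision against the static world is left out. The only point it illustrates is that a player advances by the client's own dt each time one of its inputs arrives, with no server-wide tick.

```go
package main

import "fmt"

// Input is a hypothetical per-frame input from the owning client.
// DT is the client's frame delta time in seconds.
type Input struct {
	Sequence uint64
	MoveX    float64 // strafe axis, -1..1
	MoveZ    float64 // forward/back axis, -1..1
	DT       float64
}

// PlayerState is a hypothetical, heavily simplified player state.
type PlayerState struct {
	X, Z        float64
	LastApplied uint64 // sequence number of the last input applied
}

const moveSpeed = 5.0 // metres per second (assumed value)

// Step advances one player by exactly one client frame. There is no global
// server tick: this runs only when an input for this player arrives.
func Step(s PlayerState, in Input) PlayerState {
	s.X += in.MoveX * moveSpeed * in.DT
	s.Z += in.MoveZ * moveSpeed * in.DT
	s.LastApplied = in.Sequence
	return s
}

func main() {
	s := PlayerState{}
	// Two client frames of different length, e.g. a variable frame rate.
	s = Step(s, Input{Sequence: 1, MoveZ: 1, DT: 0.01})
	s = Step(s, Input{Sequence: 2, MoveZ: 1, DT: 0.02})
	fmt.Printf("z=%.2f last=%d\n", s.Z, s.LastApplied) // z=0.15 last=2
}
```

Because dt comes from the client frame, a client running at a variable frame rate is simulated with exactly the frame lengths it displayed.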

Let's assume player inputs are sent on average at 100Hz, and each input is 100 bytes long. These inputs are sent over UDP because they're time series data and are extremely latency sensitive. All inputs must arrive, or the client will see mispredictions (pops, warping and rollback) because the server simulation is authoritative.

We cannot use TCP for input reliability, because head of line blocking would cause significant delays in input delivery under latency and packet loss. Instead, we send the most recent 10 inputs in each input packet, giving us 10X redundancy in case of packet loss. Inputs are relatively small, so this strategy is acceptable: if one input packet is dropped, the very next packet 1/100th of a second later contains the dropped input PLUS the next input we need to step the player forward.
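The redundancy scheme can be sketched as follows, assuming a hypothetical packet layout in which every input carries a sequence number. The client repeats its last 10 inputs in each packet, and the server applies each input exactly once by skipping anything at or below the last sequence it has already simulated. With 10 inputs per packet, up to 9 consecutive lost packets are recovered; a longer burst leaves a gap that this sketch simply steps over.

```go
package main

import "fmt"

const inputsPerPacket = 10 // most recent inputs repeated in every packet

// Input is a hypothetical 100 byte input; only Sequence matters here.
type Input struct {
	Sequence uint64
	Payload  [92]byte // placeholder bringing the input to 100 bytes
}

// InputPacket carries up to the last 10 inputs, oldest first.
type InputPacket struct {
	Inputs []Input
}

// Client keeps its recent inputs so each packet can repeat them.
type Client struct {
	history []Input
}

// BuildPacket records a new input and returns a packet holding it plus
// up to 9 older inputs.
func (c *Client) BuildPacket(in Input) InputPacket {
	c.history = append(c.history, in)
	if len(c.history) > inputsPerPacket {
		c.history = c.history[len(c.history)-inputsPerPacket:]
	}
	return InputPacket{Inputs: append([]Input(nil), c.history...)}
}

// ServerPlayer applies each input exactly once, in order, no matter how
// many packets it was repeated in.
type ServerPlayer struct {
	LastApplied uint64
	Steps       int // stands in for running the player simulation
}

func (p *ServerPlayer) Receive(pkt InputPacket) {
	for _, in := range pkt.Inputs {
		if in.Sequence <= p.LastApplied {
			continue // already simulated from an earlier packet
		}
		p.Steps++ // real code: step the player with this input and its dt
		p.LastApplied = in.Sequence
	}
}

func main() {
	var c Client
	var p ServerPlayer
	for seq := uint64(1); seq <= 5; seq++ {
		pkt := c.BuildPacket(Input{Sequence: seq})
		if seq == 3 {
			continue // packet 3 is lost in transit
		}
		p.Receive(pkt)
	}
	// Input 3 is recovered from packet 4, so all five inputs are applied.
	fmt.Println(p.Steps, p.LastApplied) // 5 5
}
```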

First person shooters rely on the client and server running the same simulation on the same initial state, input and delta time and getting (approximately) the same result. This is known as client side prediction or, outside of game development, optimistic execution with rollback. So after the player input is processed, we send the most recent player state back to the client. When the client receives these player state packets, it rolls back and applies the correction in the past, and invisibly re-simulates the player back up to present time with stored inputs.
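A rough sketch of the client side of prediction and rollback follows. The types and the one-axis movement model are hypothetical stand-ins for the real shared player simulation, and it assumes (this is not stated above) that each server state packet is tagged with the sequence of the last input the server applied. The client predicts each input immediately and keeps it; when an authoritative state arrives, it resets to that state and replays the stored inputs that are newer.

```go
package main

import "fmt"

// Input and PlayerState are hypothetical, minimal versions of the real types.
type Input struct {
	Sequence uint64
	MoveZ    float64
	DT       float64
}

type PlayerState struct {
	Z float64
}

const moveSpeed = 5.0 // metres per second (assumed value)

// step is the deterministic simulation shared by client and server.
func step(s PlayerState, in Input) PlayerState {
	s.Z += in.MoveZ * moveSpeed * in.DT
	return s
}

// Predictor is a hypothetical client-side predictor for the local player.
type Predictor struct {
	State   PlayerState
	pending []Input // inputs the server has not yet acknowledged
}

// ApplyLocal predicts the input immediately and remembers it for replay.
func (p *Predictor) ApplyLocal(in Input) {
	p.State = step(p.State, in)
	p.pending = append(p.pending, in)
}

// OnServerState rolls back to the authoritative state for input ack and
// re-simulates every newer stored input up to the present.
func (p *Predictor) OnServerState(server PlayerState, ack uint64) {
	kept := p.pending[:0]
	for _, in := range p.pending {
		if in.Sequence > ack {
			kept = append(kept, in)
		}
	}
	p.pending = kept
	p.State = server
	for _, in := range p.pending {
		p.State = step(p.State, in)
	}
}

func main() {
	var p Predictor
	for seq := uint64(1); seq <= 3; seq++ {
		p.ApplyLocal(Input{Sequence: seq, MoveZ: 1, DT: 0.01})
	}
	// The server reports Z=0 after input 1 (a correction the client did not
	// predict), so the client resets and replays inputs 2 and 3.
	p.OnServerState(PlayerState{Z: 0}, 1)
	fmt.Printf("%.2f %d\n", p.State.Z, len(p.pending)) // 0.10 2
}
```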

You can see the R&D source code for this part of the FPS here: https://github.com/mas-bandwidth/fps/blob/main/001/README.md

Player Simulation Results

The results are fascinating. Not only is an FPS style player simulation for 1M players possible, it's cost effective, perhaps even more cost effective than a current generation FPS with 60-100 players per-level.

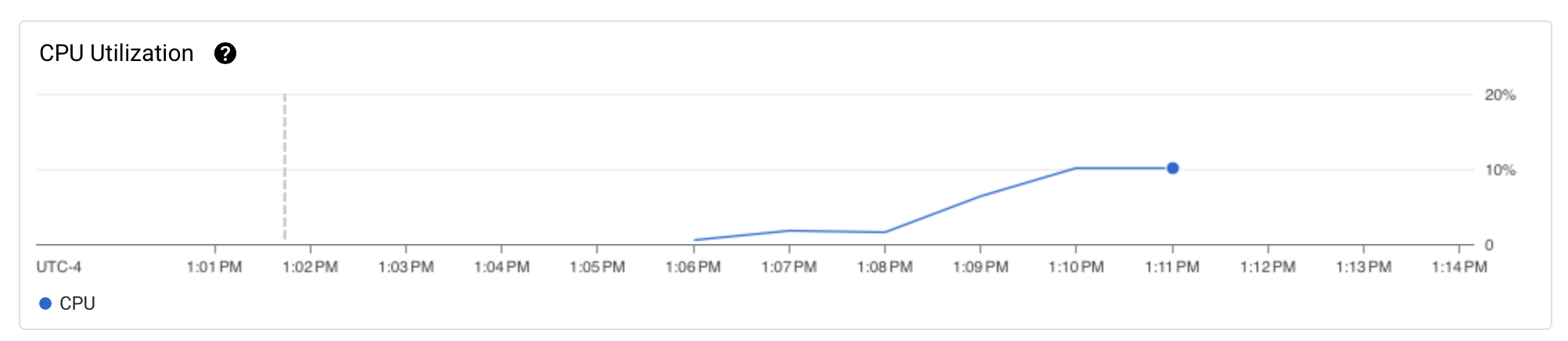

Testing indicates that we can process 8,000 players per 32-CPU bare metal machine with XDP and a 10G NIC, so we need 125 player servers to reach 1 million players.

At a cost of just $1,870 USD per-month per player server (datapacket.com), this gives a total cost of $233,750 USD per-month.

Or... just 23.4c per-player per-month.

Not only this, but the machines have plenty of CPU left to perform more complicated player simulations.

Verdict: DEFINITELY POSSIBLE.

World Servers

First person shooter style player simulation with client side prediction at 1M players is solved, but each client only receives its own player state back from the player server. To actually see and interact with other players, we need a new concept: a world server.

Once again, we can't just have one million players on one server, so we need to break the world up in some way that makes sense for the game. Perhaps it's a large open world and the terrain is broken up into a grid, with each grid square being a world server. Maybe the world is unevenly populated and dynamically adjusting Voronoi regions make more sense. Alternatively, it could be sections of a large underground structure, or even cities, planets or solar systems in some sort of space game. Maybe the distribution has nothing to do with physical location.

The details don't really matter, but the important thing is we need to distribute the load of players interacting with other objects in the world across a number of servers somehow, and we need to make sure players are evenly distributed and don't all clump up on the same server.

For simplicity let's go with the grid approach, and assume the game design has a solution for keeping players fairly evenly distributed around the world. We have a 100km by 100km world, and each grid cell is 10km by 10km. You would be able to do an amazing persistent survival FPS like DayZ in such a world.
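Assuming the 100km by 100km world is split into a 10x10 grid of 10km cells with one world server per cell, mapping a player's position to its world server could look like the sketch below. The constants and `WorldServerFor` are hypothetical, positions are assumed to be in metres measured from one corner of the world, and hand-off between cells as players move is not shown.

```go
package main

import (
	"fmt"
	"math"
)

const (
	worldSize = 100_000.0                 // 100km world edge, in metres
	cellSize  = 10_000.0                  // 10km cell edge, in metres
	gridDim   = int(worldSize / cellSize) // 10 cells per side
)

// WorldServerFor maps a position to a world server index in
// [0, gridDim*gridDim). Positions outside the world are clamped to the
// nearest edge cell.
func WorldServerFor(x, z float64) int {
	cx := clamp(int(math.Floor(x/cellSize)), 0, gridDim-1)
	cz := clamp(int(math.Floor(z/cellSize)), 0, gridDim-1)
	return cz*gridDim + cx
}

func clamp(v, lo, hi int) int {
	if v < lo {
		return lo
	}
	if v > hi {
		return hi
	}
	return v
}

func main() {
	fmt.Println(gridDim * gridDim)              // 100 world servers
	fmt.Println(WorldServerFor(0, 0))           // 0
	fmt.Println(WorldServerFor(25_000, 95_000)) // 92 (column 2, row 9)
}
```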

Each world server could be a 32 CPU bare metal machine with a 10G NIC, like the player servers, so they will each cost $1,870 USD per-month. For a 10x10 grid of 10km by 10km cells we need 100 world servers, so this costs $187,000 USD per-month, or 18.7c per-player per-month. Add this to the player server cost, and we have a total cost of 42.1c per-player per-month. Not bad.
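The cost arithmetic for both server types can be re-derived in a few lines, using the $1,870 per-month price and the 8,000 players per player server quoted above. `costPerPlayerCents` is just a helper for this illustration. The unrounded total is 42.075c, which rounds to the 42.1c figure.

```go
package main

import "fmt"

// costPerPlayerCents spreads a monthly server bill across the players it
// serves and returns US cents per player per month.
func costPerPlayerCents(servers int, usdPerServer float64, players int) float64 {
	return float64(servers) * usdPerServer / float64(players) * 100
}

func main() {
	const (
		players          = 1_000_000
		usdPerServer     = 1870.0 // monthly bare metal price quoted in the text
		playersPerServer = 8_000  // measured player server capacity
		worldServers     = 100    // 10x10 grid
	)
	playerServers := players / playersPerServer // 125
	ps := costPerPlayerCents(playerServers, usdPerServer, players)
	ws := costPerPlayerCents(worldServers, usdPerServer, players)
	fmt.Printf("player servers: %d, %.1fc per player\n", playerServers, ps) // 125, 23.4c
	fmt.Printf("world servers: %d, %.1fc per player\n", worldServers, ws)   // 100, 18.7c
	fmt.Printf("total: %.1fc per player per month\n", ps+ws)                // 42.1c
}
```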

How does this all work? First, the player servers need to regularly update the world server with the state of each player. The good news is that while the "deep" player state sent back to the owning client for client side prediction rollback and re-simulation is 1000 bytes, the "shallow" player state required for interpolation and display in the remote view of a player is much smaller. Let's assume 100 bytes.

So 100 times per-second after the player simulation completes, the player server forwards the shallow player state to the world server. We have 10,000 players per-world server, so we can do the math and see that this gives us 10,000 x 100 x 100 = 100 million bytes per-second, or 800 million bits per-second, or 800 megabits per-second, let's round up and assume it's 1Gbit/sec. This is easily done.
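A small bandwidth helper makes these numbers easy to re-derive. It ignores UDP/IP header overhead, an assumption that slightly understates real usage. It covers the world server ingest above, and also the client uplink implied by sending the last 10 inputs of 100 bytes at 100Hz, which is where a roughly 1Mbps upstream figure comes from.

```go
package main

import "fmt"

// bitsPerSecond returns the bandwidth of sending count items of the given
// size in bytes, hz times per second. Packet headers are ignored.
func bitsPerSecond(count, bytes, hz int) int {
	return count * bytes * hz * 8
}

func main() {
	// Shallow state forwarded from player servers into one world server:
	// 10,000 players x 100 bytes x 100Hz.
	ingest := bitsPerSecond(10_000, 100, 100)
	fmt.Printf("world server ingest: %d Mbit/sec\n", ingest/1_000_000) // 800

	// Client uplink: each input packet repeats the last 10 inputs of 100 bytes.
	uplink := bitsPerSecond(10, 100, 100)
	fmt.Printf("client uplink: %d kbit/sec\n", uplink/1_000) // 800
}
```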

Now the world server must track a history of all player states for all players on the world server, let's say one second of history. This history ring buffer is used for lag compensation and potentially also delta compression. In a first person shooter, when you shoot another player on your machine, the server simulates the same shot against the historical positions of the other players near you, so they are where they appeared on your client when you fired your shot. Otherwise you would need to lead your shot by the amount of latency you have with the server, and this is not usually acceptable in top-tier competitive FPS.

Let's see if we have enough memory for the player state history. 10,000 players per-world server, and we need to store a history of each player for 1 second at 100HZ. Each player state is 100 bytes, so 100 x 100 x 10,000 = 100,000,000 bytes of memory required or just 100 megabytes. That's nothing. Let's store 10 seconds of history. 1 gigabyte. Extremely doable!
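A per-player history can be a fixed-size ring buffer. The sketch below rests on assumptions: the `ShallowState` fields are hypothetical, states are pushed in increasing time order at 100Hz, and a lag compensation lookup returns the newest stored state at or before the requested time. A production system would more likely interpolate between the two states bracketing the shot time, and could binary search rather than scan.

```go
package main

import "fmt"

const (
	historyHz      = 100
	historySeconds = 1
	historySize    = historyHz * historySeconds // 100 slots per player
)

// ShallowState is a hypothetical remote-view player state.
type ShallowState struct {
	Time    float64 // server time in seconds when this state was produced
	X, Y, Z float64
}

// History is a fixed-size ring buffer of one player's recent states.
type History struct {
	slots [historySize]ShallowState
	next  int // slot the next state will be written to
	count int // number of valid slots
}

// Push stores a state, overwriting the oldest one once the buffer is full.
func (h *History) Push(s ShallowState) {
	h.slots[h.next] = s
	h.next = (h.next + 1) % historySize
	if h.count < historySize {
		h.count++
	}
}

// At returns the newest state at or before time t, i.e. where the target
// was when the shooter saw it. ok is false if t is older than the history.
func (h *History) At(t float64) (ShallowState, bool) {
	for i := 1; i <= h.count; i++ {
		s := h.slots[(h.next-i+historySize)%historySize]
		if s.Time <= t {
			return s, true
		}
	}
	return ShallowState{}, false
}

func main() {
	var h History
	// 2.5 seconds of states at 100Hz; only the last second is kept.
	for i := 0; i < 250; i++ {
		h.Push(ShallowState{Time: float64(i) / historyHz, X: float64(i)})
	}
	s, ok := h.At(2.0)
	fmt.Println(s.X, ok) // 200 true
	_, ok = h.At(1.0)
	fmt.Println(ok) // false: older than one second of history
}
```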

Now we need to see how much bandwidth it will cost to send the player state from the world server directly down to the client (we can spoof the packet in XDP so it appears to come from the player server address, so we don't have NAT issues).

10,000 players with 100 bytes of state each, sent at 100Hz, works out to roughly 1Gbit/sec of player state per client. This is probably a bit high for a game shipping today (to put it mildly), but with delta compression let's assume we can get on average an order of magnitude reduction in snapshot packet sizes, bringing it down to 100Mbps.
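Delta compression can take many forms. As one simple illustration, not necessarily what a shipping game would use, the sketch below encodes only the bytes of a 100 byte state that differ from a baseline both sides already hold, as (index, value) pairs after a count byte. It only pays off when most bytes are unchanged, and the 10x average reduction assumed above is an assumption rather than something this scheme guarantees; games often quantize fields before delta encoding as well.

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
)

const stateSize = 100 // shallow player state size in bytes

// Encode writes only the bytes of cur that differ from baseline, as a
// count followed by (index, value) pairs.
func Encode(baseline, cur [stateSize]byte) []byte {
	out := []byte{0}
	for i := 0; i < stateSize; i++ {
		if cur[i] != baseline[i] {
			out = append(out, byte(i), cur[i])
			out[0]++
		}
	}
	return out
}

// Decode rebuilds the state by applying the pairs to the same baseline.
func Decode(baseline [stateSize]byte, data []byte) ([stateSize]byte, error) {
	cur := baseline
	if len(data) == 0 || len(data) != 1+2*int(data[0]) {
		return cur, errors.New("malformed delta")
	}
	for i := 1; i < len(data); i += 2 {
		idx := int(data[i])
		if idx >= stateSize {
			return cur, errors.New("index out of range")
		}
		cur[idx] = data[i+1]
	}
	return cur, nil
}

func main() {
	var base [stateSize]byte
	cur := base
	cur[3], cur[40] = 7, 9 // only two bytes changed since the baseline
	delta := Encode(base, cur)
	back, err := Decode(base, delta)
	fmt.Println(len(delta), err == nil, bytes.Equal(back[:], cur[:])) // 5 true true
}
```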

And surprisingly... it's now quite possible. It's still definitely on the upper end of bandwidth to be sure, but according to Nielsen's Law, bandwidth available to high end connections grows by about 50% per year, so in 10 years you should have around 57 times the bandwidth you have today.

Verdict: DEFINITELY POSSIBLE.

World Database

So far we have solved player simulation with FPS style client side prediction and rollback, and we can see 10,000 other players near us – all at a reasonable cost and requiring 1Mbps up and 100Mbps down to synchronize player inputs, predicted player state, and the state of other players.

But something is missing. How do players interact with each other?

Typically a first person shooter would simulate the player on the server, see the "fire" input held down during a player simulation step and shoot out a raycast in the view direction. This raycast would be tested against other players in the world and the first object in the world hit by that raycast would have damage applied to it. Then the next player state packet sent back to the client that got hit would include health of zero and would trigger a death state. Congratulations. You're dead. Cue the kill replay.
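The hit test itself can be sketched as a ray cast against simple player volumes. Here each player is approximated as a sphere (an assumption; games usually use capsules or per-bone hitboxes), the `Target` type and `FirstHit` function are hypothetical, and occlusion by world geometry is ignored. The nearest intersection along the ray within range wins.

```go
package main

import (
	"fmt"
	"math"
)

type Vec3 struct{ X, Y, Z float64 }

func (a Vec3) Sub(b Vec3) Vec3    { return Vec3{a.X - b.X, a.Y - b.Y, a.Z - b.Z} }
func (a Vec3) Dot(b Vec3) float64 { return a.X*b.X + a.Y*b.Y + a.Z*b.Z }

// Target is a hypothetical hit volume: a player approximated as a sphere.
type Target struct {
	ID     int
	Center Vec3
	Radius float64
}

// FirstHit returns the ID of the nearest target hit by the ray from origin
// along dir (dir must be unit length) within maxDist, or -1 for a miss.
func FirstHit(origin, dir Vec3, maxDist float64, targets []Target) int {
	best, bestT := -1, maxDist
	for _, tg := range targets {
		// Solve |origin + t*dir - center|^2 = r^2 for the smallest t >= 0.
		oc := origin.Sub(tg.Center)
		b := oc.Dot(dir)
		c := oc.Dot(oc) - tg.Radius*tg.Radius
		disc := b*b - c
		if disc < 0 {
			continue // the ray's line misses this sphere
		}
		t := -b - math.Sqrt(disc)
		if t < 0 {
			t = -b + math.Sqrt(disc) // origin inside the sphere: use far root
		}
		if t >= 0 && t < bestT {
			best, bestT = tg.ID, t
		}
	}
	return best
}

func main() {
	targets := []Target{
		{ID: 1, Center: Vec3{0, 0, 20}, Radius: 1},
		{ID: 2, Center: Vec3{0, 0, 10}, Radius: 1}, // nearer, on the same line
		{ID: 3, Center: Vec3{5, 0, 5}, Radius: 1},  // off to the side
	}
	fmt.Println(FirstHit(Vec3{}, Vec3{0, 0, 1}, 100, targets)) // 2
}
```

On the architecture described below, this test would run on a world server against lag-compensated history rather than against current positions.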

But now when the player simulation is updated on a player server, in all likelihood the other players that are physically near it in the game world are not on the same player server. Player servers are simply load balanced – evenly distributing players across a number of player servers, not assigning players to player servers according to where they are in the world.

So we need a way for players to interact with each other, even though players are on different machines. And this is where the world server steps in.

The world server has a complete historical record of all other player states going back one second. If the player simulation can asynchronously call out to one or more world servers to "raycast and find first hit", and then "apply damage to <object id>", then I think we can actually make this whole thing work.

So effectively what we have now looks something like Redis for game servers: an in-memory world database that scales horizontally, with a rich set of primitives so players can interact with the world and the other objects in it. Like Redis, calls to this world database would be asynchronous. Because of this, player servers would need one goroutine (green thread) per player. Each goroutine would block on async IO to the world database and yield to other player goroutines while it waits for a response.

Think about it. Each player server has just 8,000 players on it. These players are distributed across 32 CPUs. Each CPU has to deal with only 250 players total, and these CPUs have 90% of CPU still available to do work because they are otherwise IO bound. Running 250 goroutines per-CPU and blocking on some async calls out to world servers would be incredibly lightweight relative to even the most basic implementation of an HTTP web server in Golang. It's totally possible; somebody just needs to make this world database.

Verdict: DEFINITELY POSSIBLE.

Game State Delivery

Each world server generates a stream of just 100 Mbit/sec containing all player state for 10,000 players @ 100Hz (assuming delta compression against a common baseline once every second), and we have 100 world servers.

100 Mbit/sec x 100 world servers = 10 Gbit/sec generated in total.

But there are 10,000 players per world server, and each of these 10,000 players needs to receive the same 100 Mbit/sec stream of packets from the world server. Welcome to O(n^2).
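The fan-out can be made concrete with the figures above. Sending each player its own unicast copy of a 100 Mbit/sec stream means 1 Tbit/sec of egress from every world server, about 100 times what a single 10G NIC can carry, and 100 Tbit/sec across all 100 world servers. Packet headers are ignored, and `egressMbit` is just a helper for this illustration.

```go
package main

import "fmt"

// egressMbit is the bandwidth needed to send a stream of streamMbit
// separately (unicast) to each of receivers players.
func egressMbit(streamMbit, receivers int) int {
	return streamMbit * receivers
}

func main() {
	const (
		streamMbit       = 100    // per world server stream, after delta compression
		playersPerServer = 10_000 // every player on the server needs the full stream
		worldServers     = 100
	)
	perServer := egressMbit(streamMbit, playersPerServer)
	fmt.Printf("generated by all world servers: %d Gbit/sec\n", streamMbit*worldServers/1_000) // 10
	fmt.Printf("unicast egress per world server: %d Tbit/sec\n", perServer/1_000_000)          // 1
	fmt.Printf("unicast egress in total: %d Tbit/sec\n", perServer*worldServers/1_000_000)     // 100
}
```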

If we had real multicast over the internet, this would be a perfect use case. But multicast isn't routed across the public internet. Potentially, a relay network like Network Next or Steam Datagram Relay could be the foundation of a multicast packet delivery system, with 100G+ NICs on relays at major points around the world, or alternatively a traditional network supporting multicast could be built internally with PoPs around the world, like Riot Direct.

Verdict: Expensive and probably the limiting factor for the moment. Back of the envelope calculations show that this increases the cost per-player per-month from cents to dollars. But if you already had the infrastructure available and running, it should be cost effective to implement this today. Companies that could do this include Amazon, Google, Valve, Riot, Meta and Microsoft.

Conclusion

Not only is it possible to create a first person shooter with a million players, but the per-player bandwidth is already within reach of high end internet connections in 2024.

The key limiting factor is the distribution of game state down to players due to lack of multicast support over the internet. A real-time multicast packet delivery system would have to be implemented and deployed worldwide. This would likely be pretty expensive, and not something that an average game developer could afford to do.

On the game code side there is good news. The key missing component is a flexible, game-independent world database that would enable the leap from hundreds to at least thousands of players. I propose that creating this world database would be a fantastic open source project.

When this world database is combined with the architecture of player servers and world servers, games with 10,000 to 1M players become possible. When a real-time multicast network emerges and becomes widely available, these games will become financially viable.