What is lag?

I'm Glenn Fiedler and welcome to Más Bandwidth, my new blog at the intersection of game network programming and scalable backend engineering.

You've spent years of your life working on a multiplayer game, then you launch, and your subreddit is full of complaints about lag. Should you just go and integrate a network accelerator like Network Next to fix it?

Absolutely not.

Lag doesn't always mean latency. These days players use "lag" to describe anything weird or unexpected. The player might just have had low frame rate, whiffed an attack, or got hit when they thought they blocked.

Before considering a network accelerator for your multiplayer game, it's important to first exclude any causes of "lag" that have nothing to do with the network. A network accelerator can't fix these problems. That's up to you.

In this article I share a comprehensive list of things I've personally experienced masquerading as lag in production, and what you can do to fix them. I hope this helps you fix some cases of "lag" in your game!

TCP

Problem: You're sending your game network traffic over TCP.

While acceptable for turn-based multiplayer games, TCP should never be used for latency sensitive multiplayer games. Why? Because in the presence of latency and packet loss, head of line blocking in TCP induces large hitches in packet delivery: when one packet is lost, everything sent after it is held back until the lost packet is retransmitted. There is no fix for this when using TCP; it's just a property of reliable-ordered delivery.

Note that if you're using WebSockets or HTTP for your game, these are built on top of TCP and have the same problem. Consider switching to WebRTC which is built on top of UDP.

As a side-note, it's possible to terminate TCP at the edge, so the TCP connection and any resent packets are only between the player and an edge node close to them. While this improves things, it doesn't fix everything. Some players, especially in South America, Middle East, APAC and Africa will still get high latency and packet loss between their client and the edge node, causing hitches. For example, I've seen players in São Paulo get 250ms latency and 20% packet loss to servers in... São Paulo. The internet is just not great at always taking the lowest latency route, even when the server is right there.

Solution: Use a UDP-based protocol for your game. UDP is not subject to head of line blocking and you can design your network protocol so any essential data is included in the very next packet after one packet is lost. This way you don't have to wait for retransmission. For example, you can include all unacked inputs or commands in every UDP packet.
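Here's a minimal sketch of the "include all unacked inputs in every packet" idea. The class name, the `max_unacked` bound and the ack scheme (the server acks the most recent input sequence it has received) are assumptions made for illustration, not a description of any particular game's protocol.

```python
from collections import deque


class InputSender:
    """Resends every input the server has not acked yet in each packet."""

    def __init__(self, max_unacked=64):
        self.next_sequence = 0
        # Oldest first. The bound (a hypothetical limit) stops packets
        # from growing forever if acks stop arriving.
        self.unacked = deque(maxlen=max_unacked)

    def add_input(self, input_data):
        self.unacked.append((self.next_sequence, input_data))
        self.next_sequence += 1

    def build_packet(self):
        # Every packet carries all inputs still waiting for an ack,
        # so a single lost packet never forces a retransmit round trip.
        return list(self.unacked)

    def on_ack(self, acked_sequence):
        # Assumes the server acks the latest input sequence it received.
        while self.unacked and self.unacked[0][0] <= acked_sequence:
            self.unacked.popleft()


sender = InputSender()
sender.add_input("forward")
first = sender.build_packet()   # suppose this packet is lost
sender.add_input("jump")
second = sender.build_packet()  # still carries "forward" as well
sender.on_ack(1)
third = sender.build_packet()   # nothing left to resend
```

If the first packet is dropped, the second one delivers both inputs, so the server never has to wait for a resend.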

Low frame rate on client

Problem: You have low frame rate on your client.

This is something I see a lot on games that support both current and previous gen consoles. On the older consoles, clients can't quite keep up with simulation or rendering and frame rate drops. Low frame rate increases the delay between inputs and corresponding action in your game, which some players feel as lag, especially if the low frame rate occurs in the middle of a fight.

Solution: Make sure you hit a steady 60fps on the client. Implement analytics that track client frame rate so you can exclude it as a cause of "lag". Be aware that it's common for new content to be released post-launch (like a new level or items) that tank frame rate, especially on older platforms, so make sure you launch with instrumentation that lets you track this over time and have enough contextual information so you can find cases where particular characters, levels or items cause low frame rate.

Hitching on client or server

Problem: You have sporadic hitches on the client or server.

Hitches can happen in your game for many reasons. There could be a delay loading in a streaming asset, contention on a mutex, or just a bug where some code takes a lot more time to run than expected.

Hitches on the client interrupt play and cause a visible stutter that players feel as lag. Long hitches can also shift the client back in time relative to the server, causing inputs to be delivered too late for the server to use them, leading to rollbacks and corrections on the client.

Hitches on the server are worse. Packets sent from the server are delayed, causing a visual stutter across all clients. If the hitch is long enough, clients are shifted forward in time relative to the server, causing inputs from clients to be delivered too early for the server to use them, once again leading to rollbacks and corrections.

Solution: Implement debug functionality to trigger hitches on the client and server, and make sure your netcode handles them well. Track not just low frame rate in your analytics, but also when hitches occur on the client and server. Track the total amount of hitches across your game over time so you can see if you've broken something, or fixed them in your latest patch.
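As a rough sketch, frame rate and hitch tracking could look something like this. The definition of a hitch used here (a frame taking more than twice the target frame time) and all of the names are assumptions; pick whatever threshold makes sense for your game.

```python
class HitchTracker:
    """Counts frames and hitches so they can be reported to analytics."""

    def __init__(self, target_fps=60.0, hitch_factor=2.0):
        self.target_dt = 1.0 / target_fps
        # Assumption: a frame longer than hitch_factor * target is a hitch.
        self.hitch_threshold = self.target_dt * hitch_factor
        self.frames = 0
        self.hitches = 0
        self.worst_dt = 0.0
        self.total_time = 0.0

    def record_frame(self, dt):
        """Call once per frame with the measured frame time in seconds."""
        self.frames += 1
        self.total_time += dt
        self.worst_dt = max(self.worst_dt, dt)
        if dt > self.hitch_threshold:
            self.hitches += 1

    def summary(self):
        avg_fps = self.frames / self.total_time if self.total_time > 0 else 0.0
        return {
            "frames": self.frames,
            "hitches": self.hitches,
            "avg_fps": avg_fps,
            "worst_frame_ms": self.worst_dt * 1000.0,
        }


tracker = HitchTracker()
for _ in range(58):
    tracker.record_frame(1.0 / 60.0)
tracker.record_frame(0.1)   # two simulated 100ms hitches
tracker.record_frame(0.1)
report = tracker.summary()
```

The same tracker works on the server if you feed it tick times instead of frame times. Attach context like level, character and platform to the summary before uploading it so you can slice the data later.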

Too many game instances on server

Problem: You get bad performance because you are running too many game instances on a bare metal machine or VM.

Capacity planning is an important part of launching a multiplayer game. This includes measuring the CPU, memory and bandwidth used per-game instance, so you can estimate how many game server instances you can run per machine.

This becomes especially difficult when your game runs across multiple clouds, or a mix of bare metal and cloud, for example with a hosting provider like Multiplay. Now your server fleet is heterogeneous, with a mix of different server types, each with different performance.

I've seen teams address this differently. Some teams prefer to standardize on a single VM as their spec with a certain amount of CPU and memory allocated, and refuse to think about multithreading at the game instance level. Other teams run multiple instances in separate processes on the same machine, trusting the OS to take care of it. I've also seen teams run multiple instances of the server in the same process with shared IO that distributes packets to game instances pinned to specific CPUs. This gives developers greater control over scheduling between game instances, but if the multi-instance server process crashes, all instances of the game server go down.

Solution: Make sure you have analytics visibility not only at the game server instance level, but also to the underlying bare metal or VM. If you see game instances running on the same underlying hardware having low frame rate or hitches at the same time, check to see if it's caused by having too many game server instances running on that machine.
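One way to look for this in your data is to group hitch events by machine and time window, and flag machines where several different instances hitch together. This is only a sketch: the event tuple layout, the one second window and the two instance threshold are all assumptions.

```python
from collections import defaultdict


def find_suspect_machines(hitch_events, window_seconds=1.0, min_instances=2):
    """Return machines where several instances hitched in the same window.

    hitch_events: iterable of (machine_id, instance_id, timestamp_seconds).
    """
    instances_per_window = defaultdict(set)
    for machine_id, instance_id, timestamp in hitch_events:
        window = int(timestamp // window_seconds)
        instances_per_window[(machine_id, window)].add(instance_id)

    suspects = set()
    for (machine_id, _window), instances in instances_per_window.items():
        if len(instances) >= min_instances:
            suspects.add(machine_id)
    return sorted(suspects)


events = [
    ("machine-1", "game-a", 10.1),
    ("machine-1", "game-b", 10.4),  # same machine, same second
    ("machine-2", "game-c", 10.2),
    ("machine-2", "game-c", 55.0),  # same instance twice, not correlated
]
suspects = find_suspect_machines(events)
```

Fixed windows can miss two hitches that straddle a window boundary, so treat the result as a hint for where to look rather than proof.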

Noisy neighbors on cloud

Problem: Random bad performance on some server VMs.

You might have a noisy neighbor problem. Another customer is using too much CPU or networking resources on a hardware node shared with your VM.

Solution: Configure a single tenant arrangement with your cloud provider, or rent larger instance types that map to the actual hardware and slice them up into multiple game server instances yourself.

Defective bare metal machine in server fleet

Problem: A bare metal machine in your fleet is overheating, causing bad performance for all matches played on it.

I've seen this frequently, and although you might think that bare metal hosting providers like Multiplay would perform their own server health checks for you, the "trust, but verify" adage definitely applies here.

I've seen bare metal servers in the fleet that overheat as matches are played on them because their fan is broken. Players keep getting sent to them in the overheated state and they experience hitching, low frame rates and bad performance that looks a lot like lag.

Solution: Implement your own server health checks to identify badly performing hardware, not only when it's initially added to the server pool, but also if it starts performing badly mid-match. Remember that if something can go wrong, with enough game servers in your fleet, it probably will. Automatically exclude bad servers from running matches until you can work with your hosting company to resolve the problem.

Low server tick rate

Problem: You have a low tick rate on your server.

Did you know that on average more latency comes from the server tick rate than the network?

For example, Titanfall 1 shipped with 10HZ tick rate. This means that a Titanfall 1 game server steps the world forward in discrete 100ms steps. A packet arriving from a client just after the server pumps recvfrom at the start of the frame has to wait 100ms before being processed, even if the client is sending packets to the server at a high rate like ~144HZ.

This quantization to server tick rate adds up to 100ms of additional latency on top of whatever the network delay is. The same applies on the client. For example, a low frame rate like 30HZ adds up to 33ms additional latency.

Solution: Increase your client frame rate and server tick rates as much as possible. At 60HZ ticks, the server adds only 16.67ms of additional latency worst case. At 144HZ a maximum of ~7ms additional latency is added. To go even further you can implement subticks, which means you process player packets immediately when they come in from the network, without quantizing socket reads to your server tick rate. See this article for details.
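The arithmetic behind these numbers is simple enough to put in a few lines. The function names are made up for this example, and the average figure assumes packets arrive at times spread uniformly across the tick.

```python
def worst_case_tick_delay_ms(tick_rate_hz):
    """A packet arriving just after the socket read waits one full tick."""
    return 1000.0 / tick_rate_hz


def average_tick_delay_ms(tick_rate_hz):
    """Assumes arrival times are uniformly spread across the tick."""
    return worst_case_tick_delay_ms(tick_rate_hz) / 2.0


for hz in (10, 30, 60, 144):
    print(f"{hz:>3}Hz: worst {worst_case_tick_delay_ms(hz):6.2f}ms, "
          f"average {average_tick_delay_ms(hz):6.2f}ms")
```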

Didn't playtest with simulated network conditions during development

Problem: Your team spent the entire development play testing on LAN with zero latency, no packet loss and minimal jitter. Suddenly the game launches, and everything sucks, because now players are experiencing the game under real-world network conditions.

All multiplayer games play great on the LAN. To make sure your game feels great at launch, it's essential that you playtest with realistic network conditions during development.

Of course, this frustrates designers because now the game doesn't feel as good. But as my good friend Jon Shiring says: Yes. Get mad. The game doesn't play well. So change the game code and design so it does, because otherwise you're fooling yourself.

Solution: Playtests should always occur under simulated network conditions matching what you expect to have at launch. I recommend at least 50ms round trip latency, several frames worth of jitter, and a mix of steady packet loss at 1% combined with bursts of packet loss at least once per-minute. The designers must not have a way of disabling this. You have to force the issue.
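A bare-bones network simulator along these lines might look like the sketch below. Everything about it is an assumption made for illustration: the class and parameter names, uniformly distributed jitter, the 33ms default (roughly two frames at 60HZ) and the manual `start_loss_burst` trigger. Real simulators model conditions in more detail.

```python
import heapq
import random


class NetworkSimulator:
    """Delays, jitters and drops packets sent in one direction."""

    def __init__(self, rtt_ms=50.0, jitter_ms=33.0, loss_percent=1.0, seed=None):
        self.one_way_ms = rtt_ms / 2.0   # half the round trip each way
        self.jitter_ms = jitter_ms
        self.loss_percent = loss_percent
        self.burst_end_ms = -1.0
        self.rng = random.Random(seed)
        self.in_flight = []              # heap of (deliver_at_ms, order, packet)
        self.counter = 0

    def start_loss_burst(self, now_ms, duration_ms):
        """Drop everything sent before now_ms + duration_ms."""
        self.burst_end_ms = now_ms + duration_ms

    def send(self, packet, now_ms):
        if now_ms < self.burst_end_ms:
            return                        # lost in a burst
        if self.rng.random() * 100.0 < self.loss_percent:
            return                        # steady random loss
        delay_ms = self.one_way_ms + self.rng.uniform(0.0, self.jitter_ms)
        heapq.heappush(self.in_flight, (now_ms + delay_ms, self.counter, packet))
        self.counter += 1

    def receive(self, now_ms):
        """Return every packet whose delivery time has been reached."""
        delivered = []
        while self.in_flight and self.in_flight[0][0] <= now_ms:
            delivered.append(heapq.heappop(self.in_flight)[2])
        return delivered


sim = NetworkSimulator(rtt_ms=50.0, jitter_ms=33.0, loss_percent=1.0, seed=42)
sim.send(b"input 0", now_ms=0.0)
arrived = sim.receive(now_ms=100.0)
```

Put one of these in each direction between client and server, and have the playtest build trigger a loss burst at least once per minute.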

Network conditions changed mid-match

Problem: Network conditions change mid-match leading to problems with your time synchronization and de-jitter buffers on client and server.

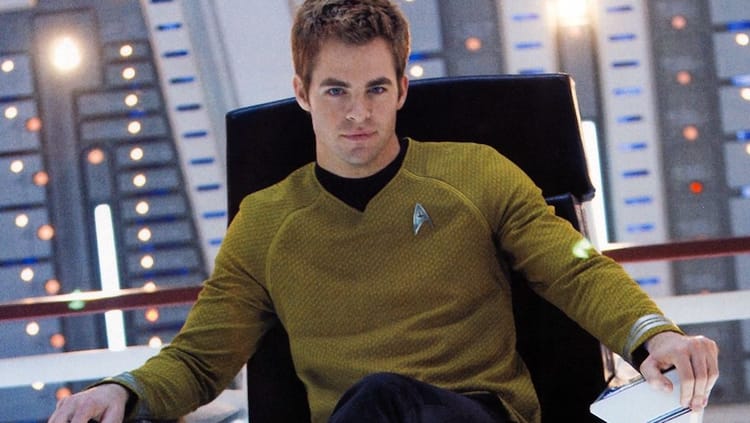

I've seen things you people wouldn't believe. Attack ships on fire off the shoulder of Orion. I watched C-beams glitter in the dark near the Tannhäuser Gate. All those moments will be lost in time, like tears in rain

If it can go wrong, with enough players, it will. The internet is best effort delivery only. I've seen many matches where players initially have good network performance and it turns bad mid-match.

Latency that goes from low to high and back again, jitter that oscillates between 0 and 100ms over 10 seconds. Weird stuff that happens depending on the time of day. Somebody microwaves a burrito and the Wi-Fi goes to hell.

Solution: Implement debug functionality to toggle different types of bad network performance during development. Toggle between 50ms and 150ms latency. Toggle between 0ms and 100ms jitter. Make sure your time synchronization, interpolation buffers and de-jitter buffers are able to handle these transitions without freaking out too much. Add analytics so you can count the number of times packets are lost because they arrive too late for your various buffers. Be less aggressive with short buffer lengths and accept that a wide variety of network conditions demands deeper buffers than you would otherwise prefer.
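Counting late packets can be as simple as the sketch below. It assumes a fixed-delay de-jitter buffer and that send and arrival times are already expressed on a common synchronized clock; both are simplifications, and the names are invented for this example.

```python
class JitterBuffer:
    """Fixed-delay de-jitter buffer that counts packets arriving too late."""

    def __init__(self, delay_ms=100.0):
        self.delay_ms = delay_ms
        self.accepted_packets = 0
        self.late_packets = 0

    def on_packet(self, send_time_ms, arrival_time_ms):
        """Return True if the packet arrived before its playout time."""
        playout_time_ms = send_time_ms + self.delay_ms
        if arrival_time_ms > playout_time_ms:
            self.late_packets += 1   # report this counter to analytics
            return False
        self.accepted_packets += 1
        return True


buffer = JitterBuffer(delay_ms=100.0)
buffer.on_packet(send_time_ms=0.0, arrival_time_ms=50.0)     # in time
buffer.on_packet(send_time_ms=16.0, arrival_time_ms=166.0)   # latency spiked
```

If the late packet count climbs when you toggle conditions in a debug build, your buffers are too short for the transitions you're testing.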

Desync in client side prediction

Problem: The player sees occasional pops and warps because the client and server player code aren't close enough in sync.

In the first person shooter network model with client side prediction, the client and server run the same player simulation code, including movement, inventory and shooting, for the same set of delta times and inputs, expecting the same (or a close enough) result on both sides.

If the client and server disagree about the player state, the server wins. The resulting correction on the client can feel pretty violent. Pops, warps and being pulled back to correct state over several seconds can feel a lot like "lag".

Solution: It's vital that your client and server code are close enough in sync for client side prediction to work. I've seen some games only do the rollback if the client and server state in the past are different by some amount, eg. only if client and server positions are different by more than x centimeters. Don't do this. Always apply the server correction and re-simulation, even if the result will be the same. This way you exercise the rollback code all the time, and you'll catch any desyncs and pops as early as possible. Instrument your game so you track when corrections result in a visible pop or warp for the player, so you know if some code you pushed has increased or decreased the frequency of mispredictions.
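To make "always apply the correction and re-simulate" concrete, here's a toy one-dimensional sketch. The `simulate` function stands in for your shared player code, and the class, the `pop_threshold` value and the way pops are measured are all assumptions for illustration.

```python
def simulate(position, move, dt):
    """Stand-in for the shared player code run on both client and server."""
    return position + move * dt


class PredictedPlayer:
    def __init__(self):
        self.position = 0.0
        self.pending = []        # (sequence, move, dt) not yet acked by server
        self.corrections = 0
        self.visible_pops = 0

    def apply_input(self, sequence, move, dt):
        self.pending.append((sequence, move, dt))
        self.position = simulate(self.position, move, dt)

    def on_server_state(self, acked_sequence, server_position, pop_threshold=0.01):
        before = self.position
        # Drop inputs the server has already processed.
        self.pending = [p for p in self.pending if p[0] > acked_sequence]
        # Always roll back to the server state and replay pending inputs,
        # even when the result is expected to match the prediction.
        position = server_position
        for _sequence, move, dt in self.pending:
            position = simulate(position, move, dt)
        self.position = position
        self.corrections += 1
        error = abs(position - before)
        if error > pop_threshold:
            self.visible_pops += 1   # report to analytics
        return error


player = PredictedPlayer()
for sequence in range(3):
    player.apply_input(sequence, move=1.0, dt=0.5)
agree = player.on_server_state(acked_sequence=0, server_position=0.5)
disagree = player.on_server_state(acked_sequence=1, server_position=0.75)
```

The first update matches the prediction, so the rollback runs but changes nothing. The second disagrees with what the client predicted and shows up as a counted pop.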

Broken lag compensation in shooter

Problem: The player shoots and hits another player but doesn't get credit for the shot.

Nothing gets serious first person shooters screaming "lag" faster than filling another player with bullets but not getting credit for the kill.

Because of this, top-tier first person shooters like Counter-Strike, Valorant and Apex Legends implement latency compensation so players get credit for shots that hit from their point of view.

Behind the scenes this is implemented with a ring buffer on the server containing historical state for all players. When the server executes shared player code for the client, it interpolates between samples in this ring buffer to reconstruct the player's point of view on the server, temporarily moving other players' objects to the positions they were in on the client at the time the bullet was fired.
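A stripped-down version of that history buffer might look like this. It's a sketch under several assumptions: only positions are stored, interpolation is linear, samples are recorded with increasing timestamps, and the names are invented for the example. It isn't how any specific game implements it.

```python
from collections import deque


class PositionHistory:
    """Ring buffer of past positions for one player, oldest first."""

    def __init__(self, capacity=64):
        # deque with maxlen discards the oldest sample when full.
        self.samples = deque(maxlen=capacity)

    def record(self, time, position):
        """Call once per server tick. Assumes time only increases."""
        self.samples.append((time, position))

    def sample(self, time):
        """Linearly interpolate the position at a time in the past."""
        if not self.samples:
            return None
        samples = list(self.samples)
        if time <= samples[0][0]:
            return samples[0][1]      # older than the history we kept
        if time >= samples[-1][0]:
            return samples[-1][1]     # newer than the latest sample
        for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
            if t0 <= time <= t1:
                if t1 == t0:
                    return p1
                alpha = (time - t0) / (t1 - t0)
                return tuple(a + (b - a) * alpha for a, b in zip(p0, p1))
        return samples[-1][1]


history = PositionHistory()
history.record(1.0, (0.0, 0.0, 0.0))
history.record(1.5, (1.0, 0.0, 2.0))
rewound = history.sample(1.25)   # halfway between the two samples
```

When processing a shot, the server would sample every other player's history at the shooter's view time, move them there, run the hit test, then restore them.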

If this code isn't working correctly, players become incredibly frustrated when they don't get credit for their shots, especially pro players and streamers. And you don't want this. ps. I accidentally once broke lag comp in Titanfall 2 during development, and the designers noticed the very same day, in singleplayer.

Solution: Implement a bunch of visualizations and debug tools you can use to make sure your lag compensation is working correctly. If possible, implement analytics to detect "fake hits" which are hits made on the client that don't get credit on the server. If these go up in production, you've probably broken lag comp.

Lag switches

Problem: Players are using lag switches.

Somebody is being a dick and using a lag switch in your game. When they press the button, packets are dropped. In the remote view their player warps around and is hard to attack.

Solution: The fix depends on your network model. Buy some lag switches and test with them during development and make any changes necessary so they don't give assholes any advantage.

No servers near player

Problem: Players are getting a bad experience because there aren't any servers near them.

The speed of light is not just a good idea. It's the law.

While a network accelerator certainly can help reduce latency to a minimum, players in São Paulo, Brasil are never going to get as good an experience playing in Miami as they do on servers in São Paulo. Similarly, players in the Middle East could play on Frankfurt, but they'll get a much better experience with servers in Dubai, Bahrain or Turkey.

Solution: Instrument your game with analytics that include the approximate player location (latitude, longitude) using an ip2location database like maxmind.com so you can see where your players are, then deploy servers in major cities near players.
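Once you have a latitude and longitude per player, you can estimate the best round trip time physics allows to each datacenter. The sketch below uses the haversine great-circle distance and assumes light in fiber covers roughly 200km per millisecond. The coordinates are approximate city centers, and real routes are longer than the great circle, so treat the result as a lower bound only.

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0   # assumption: roughly two thirds the speed of light


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two (latitude, longitude) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2.0) ** 2)
    return 2.0 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_ms(lat1, lon1, lat2, lon2):
    """Lower bound on round trip time over a perfectly straight fiber."""
    return 2.0 * great_circle_km(lat1, lon1, lat2, lon2) / FIBER_KM_PER_MS


SAO_PAULO = (-23.55, -46.63)   # approximate coordinates
MIAMI = (25.76, -80.19)

distance_km = great_circle_km(*SAO_PAULO, *MIAMI)
best_case_rtt_ms = min_round_trip_ms(*SAO_PAULO, *MIAMI)
print(f"{distance_km:.0f}km, at least {best_case_rtt_ms:.0f}ms round trip")
```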

Matchmaker sends players to the wrong datacenter

Problem: Your matchmaker sends players to the wrong datacenter, giving them higher latency than necessary.

While players in Colombia, Ecuador and Peru are technically in South America, you shouldn't send them to play on servers in São Paulo, Brasil, because the Amazon rainforest is in the way.

Several games I've worked with end up hardcoding the northern portion of South America to play in Central America region (Miami), instead of sending them to South America (São Paulo). Somewhat paradoxical, but it's true. Don't assume that just selecting the correct region from a player's point of view (South America) always results in the best matches.

Solution: Instrument your game with ip2location (lat, long) per-player. Implement analytics that let you look on a per-country basis and see which datacenters players in that country play in, including the % of players going to each datacenter, and the corresponding average latency they receive. Keep an open mind and tune your matchmaker logic as necessary.
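The per-country report could be computed with something like this sketch. The session tuple layout and the sample data are made up for illustration; in practice this would more likely be a query in your analytics system.

```python
from collections import defaultdict


def datacenter_breakdown(sessions):
    """Summarize where each country's players end up playing.

    sessions: iterable of (country, datacenter, latency_ms), one per session.
    Returns {country: {datacenter: {"percent": ..., "avg_latency_ms": ...}}}.
    """
    latencies = defaultdict(lambda: defaultdict(list))
    for country, datacenter, latency_ms in sessions:
        latencies[country][datacenter].append(latency_ms)

    report = {}
    for country, per_datacenter in latencies.items():
        total = sum(len(values) for values in per_datacenter.values())
        report[country] = {
            datacenter: {
                "percent": 100.0 * len(values) / total,
                "avg_latency_ms": sum(values) / len(values),
            }
            for datacenter, values in per_datacenter.items()
        }
    return report


# Hypothetical sample data.
sessions = [
    ("CO", "miami", 60.0),
    ("CO", "miami", 80.0),
    ("CO", "miami", 70.0),
    ("CO", "saopaulo", 160.0),
]
report = datacenter_breakdown(sessions)
```

A country where a large share of players lands in a datacenter with a much higher average latency than the alternative is a good place to start tuning.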

Partying up with friends who are far away

Problem: A player in New York parties up with their best friend in Sydney, Australia and plays your game.

File this one under "impossible problems". You really can't tell players they can't party up with a friend on the other side of the world, even though it might not be the smartest idea in your hyper-latency sensitive fighting game – you just have to accommodate it as best you can.

Solution: Make your game as latency tolerant as possible. Regularly run playtests with one player having 250ms latency so you know how it plays in the worst case, both from that player's point of view, and from the point of view of other players in the game with them. Make sure it's at least playable, even though it's probably not possible for the experience to be a great one. Sometimes the only thing you can do is put up a bad connection icon above players with less than ideal network conditions, so the person's connection gets the blame instead of your netcode.

Latency divergence

Problem: Players are whiplashed between different server locations in the same region, each with radically different network performance, and get a pattern of good-bad-good-bad matches when playing your game.

I once helped a multiplayer game that was load balancing players in South America across game servers in Miami and São Paulo. Players in São Paulo, depending on the time of day and the player pool available, would get pulled up to Miami to play one match at 180ms latency, and then the next match they'd be back on a São Paulo server with 10ms latency. This was happening over and over.

Solution: Latency divergence is the difference between the minimum and maximum latency experienced by a player in a given day. Extend your analytics to track average latency divergence on a per-country or state basis, and if you see countries with high latency divergence, adjust your matchmaker logic to fix it.
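Using that definition, the metric takes only a few lines to compute. The input layout (one list of per-match latencies per player per day, tagged with a region) and the sample numbers are assumptions for this sketch.

```python
from collections import defaultdict


def latency_divergence_ms(match_latencies_ms):
    """Max minus min latency across one player's matches in a day."""
    return max(match_latencies_ms) - min(match_latencies_ms)


def average_divergence_by_region(player_days):
    """player_days: iterable of (region, [latency_ms for each match that day])."""
    per_region = defaultdict(list)
    for region, match_latencies_ms in player_days:
        if match_latencies_ms:
            per_region[region].append(latency_divergence_ms(match_latencies_ms))
    return {region: sum(values) / len(values)
            for region, values in per_region.items()}


# Hypothetical sample data.
player_days = [
    ("BR", [180.0, 10.0, 175.0, 12.0]),   # bounced between two locations
    ("BR", [12.0, 14.0]),
    ("US", [30.0, 35.0]),
]
divergence = average_divergence_by_region(player_days)
```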

Unfair advantage for low latency players

Problem: "Low ping bastards" have too much of an advantage. Regular players get frustrated and quit your game.

It's generally assumed that players with lower pings have an advantage in competitive games, and will win more often than not in a one-on-one fight with a higher ping player. It's your job to make sure this is not the case.

Ideally, your game plays identically in some window: [0,50ms] latency at minimum, and perhaps not quite as well but acceptably so at [50ms,100ms], only degrading above 100ms.

In this case, the goal is to make sure that the kill vs. death ratios for latency buckets below 100ms are close to identical. Players above 100ms might have some disadvantage, but do your best to ensure that disadvantage isn't too much.

Solution: Instrument your game with analytics that track key metrics like damage done, damage taken, kills, deaths, wins, losses, and bucket them according to player latency at the time of each event. Look for any trend that indicates an advantage for low latency players and fix it with game design and netcode changes.
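Here's a small sketch of the bucketing for kills and deaths. The 50ms bucket size, the 200ms cap, the event layout and the sample events are assumptions for illustration; the same approach extends to damage, wins and losses.

```python
from collections import defaultdict


def latency_bucket(latency_ms, bucket_size_ms=50, max_ms=200):
    """Map a latency to a label such as "0-50ms" or "200ms+"."""
    if latency_ms >= max_ms:
        return f"{max_ms}ms+"
    low = int(latency_ms // bucket_size_ms) * bucket_size_ms
    return f"{low}-{low + bucket_size_ms}ms"


def kill_death_ratio_by_latency(events):
    """events: iterable of (kind, latency_ms), kind is "kill" or "death".

    latency_ms is the player's latency at the time of the event.
    Buckets with no deaths map to None.
    """
    counts = defaultdict(lambda: {"kill": 0, "death": 0})
    for kind, latency_ms in events:
        counts[latency_bucket(latency_ms)][kind] += 1
    return {
        bucket: (c["kill"] / c["death"] if c["death"] else None)
        for bucket, c in counts.items()
    }


# Hypothetical sample events.
events = [
    ("kill", 20), ("kill", 30), ("death", 40),
    ("kill", 120), ("death", 130), ("death", 140),
]
ratios = kill_death_ratio_by_latency(events)
```

In this made-up data the 0-50ms bucket has twice as many kills as deaths while the 100-150ms bucket has half as many, which is exactly the kind of trend to look for.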

Unfair advantage for high latency players

Problem: Really high ping players have too much advantage.

This problem is common in first person shooters with lag compensation. In these games, the server reconstructs the world as seen by the client (effectively in the past) when they fired their weapon.

The problem here is that if a player is really lagging with 250ms+ latency, they are shooting so far in the past that they end up having too much of an advantage over regular players, who feel that they are continually getting shot behind cover and cry "lag!".

Solution: Cap your maximum lag compensation window. I recommend 100ms, but it should probably be no more than 250ms worst case. It's simply not fair for high latency players to be able to shoot other players so far in the past.
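The cap itself is just a clamp on how far back the server is willing to rewind. In this sketch `requested_rewind_ms` stands for however far in the past your netcode decides the shooter's view was; how that value is derived is outside the scope of the example, and the names are invented.

```python
def lag_compensation_time_ms(server_time_ms, requested_rewind_ms,
                             max_rewind_ms=100.0):
    """Return the past server time to reconstruct for a shot.

    The rewind is clamped to [0, max_rewind_ms] so very high latency
    players cannot shoot arbitrarily far into the past.
    """
    rewind_ms = max(0.0, min(requested_rewind_ms, max_rewind_ms))
    return server_time_ms - rewind_ms


normal = lag_compensation_time_ms(10000.0, 40.0)    # rewound the full 40ms
capped = lag_compensation_time_ms(10000.0, 250.0)   # rewound only 100ms
```

With the cap in place, a 250ms player has to lead their shots a little, which is a fairer trade than letting them hit players who took cover a quarter of a second ago.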

Wrong network model

Problem: You chose the wrong network model for your game.

If you choose the wrong network model, some problems that feel like lag can't really be solved. You'll have to re-engineer your entire game to fix it, and until then your players are left with a subpar experience.

Two classic examples would be:

- Shipping a fighting game without using a GGPO style network model. Hello Multiversus.

- Shipping a hyper-competitive eSports first person shooter without using the Quake network model with client side prediction and lag comp.

Solution: Read this article before starting your next multiplayer game.

No input delay

Problem: You have tightly coupled player-player interactions but don't have any input delay.

You can't client side predict your way out of everything.

If your game has mechanics like block, dodge and parry, chances are that players are going to notice inconsistencies in the local prediction vs. what really happened on the server and cry "lag".

Solution: Consider adding several frames of input delay to improve consistency in games with tightly coupled player-player interactions. Major fighting games do this. Don't feel bad about it.
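Mechanically, input delay just means an input sampled on frame N is applied on frame N plus the delay, which gives it time to reach the other side before it's needed. A minimal sketch, with an assumed three frame delay and made-up names:

```python
class InputDelayBuffer:
    """Holds local inputs back for a fixed number of frames."""

    def __init__(self, delay_frames=3, neutral_input=None):
        self.delay_frames = delay_frames
        self.neutral_input = neutral_input
        self.scheduled = {}   # frame to apply on -> input

    def push(self, frame, input_data):
        """Record the input sampled on `frame` for use delay_frames later."""
        self.scheduled[frame + self.delay_frames] = input_data

    def pop(self, frame):
        """Return the input to simulate on `frame`, or the neutral input."""
        return self.scheduled.pop(frame, self.neutral_input)


delayed = InputDelayBuffer(delay_frames=3, neutral_input="none")
delayed.push(0, "parry")       # player presses parry on frame 0
too_early = delayed.pop(0)     # nothing scheduled for frame 0 yet
applied = delayed.pop(3)       # the parry takes effect on frame 3
```

At 60fps, three frames is 50ms, so inputs that arrive at the other side within that window can be simulated on the same frame everywhere.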

Player died and is frustrated

Problem: Gameplay elements are causing players to feel lag.

Legend has it that the Halo team at Bungie would perform playtests with a lag button that players could smash anytime they felt "lag" in the game.

Lag button usage was recorded and timestamped, so after the play test they could go back and watch a video of the player's display leading up to the button press to see what happened.

Sometimes the lag button would correspond to putting up a shield but getting hit anyway. So the Bungie folks would go and fix this, and then test again. And again. And again. Until eventually the players would only press the lag button when they died.

Solution: When lag corresponds only to dying in the game, you know that you have truly won the battle!

How's that for zen?