The case for network acceleration in multiplayer games

I'm Glenn Fiedler and welcome to Más Bandwidth, my new blog at the intersection of game network programming and scalable backend engineering.

Consider this scenario. You've just spent the last 3 years of your life working on a multiplayer game. Tens of millions of dollars have gone into developing it, and because it's competitive, you still have the cost of dedicated servers ahead of you.

You've planned your server locations and budgeted for server costs. After weighing cloud egress costs against the amount of bandwidth your game uses, you've selected an appropriate mix of bare metal and cloud.

You have retention, engagement and monetization strategies in place, so you should be able to pay for your servers and turn a healthy profit, as long as the game is sticky enough that players don't churn out.

Launch is coming up. You're nervous, but at the same time you're confident you've done everything you can:

- Your game is incredibly fun. You've playtested it with your team throughout development. You've just gone through closed beta with thousands of players and everything looks great. All signs point to success.

- You've selected a great set of server locations around the world with an excellent mix of hosting companies (Google Cloud, AWS, Datapacket, Zenlayer and i3D).

- Your game plays perfectly below 50ms of latency, still plays well up to ~100ms, and only starts to degrade above that.

- You've tested the game extensively in take-home tests and in beta, with no complaints about lag from players. You've even played matches between the EU and the USA with no problems.

- Your netcode uses UDP and has redundancy designed in: essential data is repeated in the very next packet, so it still arrives when one packet is lost.

- You've instrumented your game with analytics, so you have visibility into what is going on during beta and post launch. Latency, jitter, packet loss, frame rate and any hitches on the client and server side are all tracked.
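The redundancy in the netcode bullet can be as simple as repeating recent inputs in every packet. Below is a minimal sketch, assuming a hypothetical client that packs its last three inputs (an arbitrary window size) into each packet; the class names are illustrative and not taken from any particular engine or SDK.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class InputPacket:
    sequence: int  # sequence number of the newest input in this packet
    inputs: list   # the last few inputs, oldest first


class RedundantInputSender:
    """Hypothetical sender: each packet repeats the last `window` inputs,
    so the inputs from a lost packet arrive again in the next one."""

    def __init__(self, window=3):
        self.history = deque(maxlen=window)
        self.sequence = 0

    def build_packet(self, new_input):
        self.history.append(new_input)
        packet = InputPacket(self.sequence, list(self.history))
        self.sequence += 1
        return packet


class RedundantInputReceiver:
    def __init__(self):
        self.received = {}  # sequence number -> input

    def on_packet(self, packet):
        # The oldest input carried in this packet has this sequence number.
        first = packet.sequence - len(packet.inputs) + 1
        for offset, value in enumerate(packet.inputs):
            self.received[first + offset] = value


# Example: packet 1 is lost, but its input still arrives inside packet 2.
sender = RedundantInputSender(window=3)
packets = [sender.build_packet(f"input{i}") for i in range(4)]
receiver = RedundantInputReceiver()
for packet in (packets[0], packets[2], packets[3]):
    receiver.on_packet(packet)
print(sorted(receiver.received))  # [0, 1, 2, 3]
```

A burst of losses longer than the window still loses data, which is why clumped packet loss hurts far more than the same amount of scattered loss.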

And then you launch

There's an initial burst of players and success is within your grasp! But retention isn't where it needs to be, players are churning out, and your subreddit is full of complaints about lag.

Players are furious and demand that you "fix the servers".

You look at your server bill, and you're pretty sure you aren't paying for low-quality servers.

The chorus of complaints grows, and the engagement and monetization metrics come in. They're not tracking where they need to be. Your game is at risk of failure.

You look at your analytics and see that:

- Average latency is good (~36ms)

- Average packet loss is low (~0.15%)

- Average jitter is low (~10ms, less than one frame @ 60 fps)

But players are still complaining about lag. What's going on?

Rule #1: Averages Lie

By definition, half of your players have above median latency, and the average tells you nothing about how far above it they are.

You generate the distribution of latency around the world, sampling every 10 seconds during play and summing the play time spent in each latency bracket:

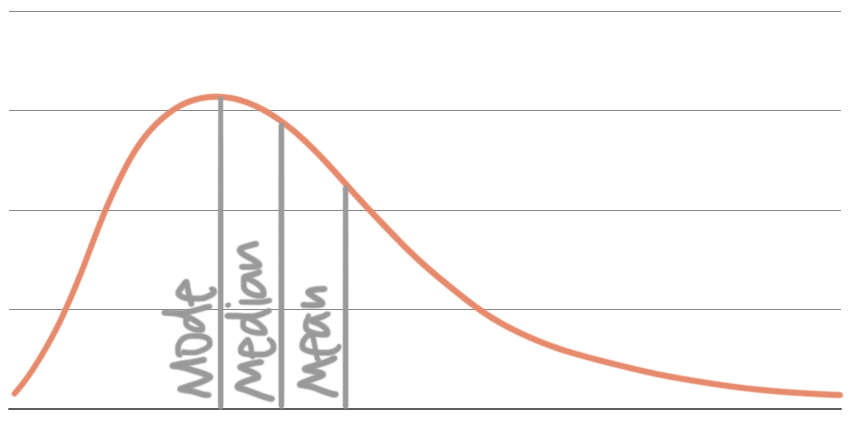

It's a right-skewed distribution with a long tail, not a normal distribution. This means that a significant percentage of play time is spent at latencies above where your game plays best.
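A minimal sketch of this analysis, assuming each 10-second sample is stored as one round-trip time in milliseconds. The sample data is made up for illustration and is not from a real game: it has a mean of 35.3ms and a median of 20ms, yet its 95th percentile is 120ms and 7% of play time is spent above 100ms.

```python
import math
from collections import Counter

SAMPLE_INTERVAL_S = 10  # each latency sample represents 10 seconds of play


def playtime_by_bracket(samples_ms, bracket_ms=10):
    """Seconds of play time per latency bracket (bracket lower bound -> seconds)."""
    totals = Counter()
    for rtt in samples_ms:
        totals[int(rtt // bracket_ms) * bracket_ms] += SAMPLE_INTERVAL_S
    return dict(sorted(totals.items()))


def percentile(samples_ms, p):
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def share_above(samples_ms, threshold_ms):
    """Fraction of play time spent above a latency threshold."""
    return sum(1 for rtt in samples_ms if rtt > threshold_ms) / len(samples_ms)


# Hypothetical samples: mostly good, with a long right tail.
samples = [20] * 60 + [30] * 25 + [60] * 8 + [120] * 5 + [250] * 2
mean = sum(samples) / len(samples)
print(f"mean={mean:.1f}ms p50={percentile(samples, 50)}ms "
      f"p95={percentile(samples, 95)}ms p99={percentile(samples, 99)}ms "
      f"above 100ms={share_above(samples, 100):.0%}")
print(playtime_by_bracket(samples))
```

Reporting percentiles (p95, p99) and the share of play time above your game's comfort threshold exposes the tail that the mean hides.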

Although packet loss on average is 0.15%, you find that some players have minimal or no packet loss, and many players have intermittent bursts of packet loss. Only rarely do you see a player with high packet loss for the entire game.

Because packet loss is zero most of the time and occurs in clumps, the average packet loss percentage doesn't represent its effect on your game. You change your metric to count the number of packet loss spikes in each player session, and it becomes clear that packet loss is having a significant impact.
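Counting spikes instead of averaging can be sketched as follows. The 5% per-interval threshold, and treating consecutive bad intervals as a single spike, are assumptions chosen for illustration rather than values from this article.

```python
def count_loss_spikes(loss_pct_per_interval, threshold_pct=5.0):
    """Count bursts of packet loss in one session.

    Each entry is the packet loss % measured over one sampling interval.
    Consecutive intervals above the threshold count as a single spike.
    """
    spikes = 0
    in_spike = False
    for loss in loss_pct_per_interval:
        if loss > threshold_pct:
            if not in_spike:
                spikes += 1
            in_spike = True
        else:
            in_spike = False
    return spikes


# Hypothetical session of 100 intervals: two bursts, otherwise clean.
session = [0.0] * 40 + [8.0, 12.0] + [0.0] * 30 + [6.0] + [0.0] * 27
average = sum(session) / len(session)
print(f"average loss {average:.2f}%, spikes {count_loss_spikes(session)}")
```

The average of 0.26% looks harmless, while the spike count shows two separate moments where the match would have felt broken.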

Looking at jitter, you see that many players have perfectly reasonable jitter (<16ms), but others have large spikes of jitter mid-game, and some have high jitter for the entire match. Interestingly, although average jitter on Wi-Fi is more than twice that of wired connections, many of the players with high jitter are playing over wired connections.
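One way to turn those three jitter patterns into a per-match label is sketched below. The 16ms threshold comes from the text; treating a match as persistently bad when at least half of its intervals exceed it is an assumption for illustration.

```python
def classify_jitter(jitter_ms_per_interval, threshold_ms=16.0, persistent_share=0.5):
    """Label one match as 'reasonable', 'spikes' or 'persistent'.

    'spikes' means some intervals exceed the threshold; 'persistent'
    means at least `persistent_share` of them do.
    """
    if not jitter_ms_per_interval:
        raise ValueError("no jitter samples")
    high = sum(1 for jitter in jitter_ms_per_interval if jitter > threshold_ms)
    if high == 0:
        return "reasonable"
    if high / len(jitter_ms_per_interval) >= persistent_share:
        return "persistent"
    return "spikes"


print(classify_jitter([5, 8, 10, 7]))     # reasonable
print(classify_jitter([5, 40, 6, 7]))     # spikes
print(classify_jitter([30, 25, 40, 10]))  # persistent
```

Grouping these labels by connection type (Wi-Fi vs wired) is what surfaces the surprising result that many high-jitter players are on wired connections.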

Digging in further:

- Some players have really high latency, packet loss or jitter.

- Latency, jitter and packet loss can be variable during a match, starting off good and then getting worse mid-game, or vice-versa.

- Many players get inconsistent performance, where the game plays well most of the time, but one in every n matches, they get bad network performance, even though they're playing on servers in the same datacenter.

- Bad network performance seems more common during peak play time (Friday and Saturday nights), suggesting that congestion could be a root cause.

- Around 10% of players are experiencing bad network performance at any time.

You start looking on a per-player basis and you notice:

- The bad network performance moves around. It's not the same 10% getting bad network performance all the time.

- Around 60% of players have bad network performance at least once a month.

- Bad network performance doesn't just affect the player having it, but also their teammates and opponents in matches.

From this point of view, it's fair to say it's affecting your entire player base.
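A back-of-the-envelope model shows why the 10% and 60% figures fit together. Assume, as a simplification, that each match independently has a 10% chance of bad network performance (in reality, bad conditions correlate with peak hours and particular ISPs). The chance of at least one bad match in n matches is then 1 - (1 - p)^n, which passes 60% after only 9 matches.

```python
import math


def p_at_least_one_bad(p_bad, matches):
    """P(at least one bad match), assuming each match is independently bad with p_bad."""
    return 1 - (1 - p_bad) ** matches


def matches_until(p_bad, target):
    """Smallest number of matches for which P(at least one bad match) >= target."""
    return math.ceil(math.log(1 - target) / math.log(1 - p_bad))


print(f"{p_at_least_one_bad(0.10, 9):.1%}")  # 61.3%
print(matches_until(0.10, 0.60))             # 9
```

Under this crude model, any player who plays more than a handful of matches a month is likely to hit bad network performance at least once.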

What's causing all this?

It's easy to dismiss all of this as just issues at the edge. Perhaps some players are just unlucky and have bad internet connections. But if that were the case, why is a player's connection good one day, but terrible the next?

The thing to understand here is that the Internet is not the system we imagine it to be, one that consistently delivers packets with the lowest latency. Instead, it's more like an amorphous blob composed of 100,000+ different networks (ASNs) that don't coordinate in any meaningful way to ensure that packets always take the lowest latency path. Packets take the wrong path, routes get congested, and misconfigurations and hairpins happen all the time.

The bad network performance is a property of the Internet itself.

You can confirm this yourself by running an experiment. Host your game servers with different hosting companies. Measure performance for each hosting company across your player base (you'll need 10k+ CCU over a month to reproduce this result). No matter which hosting company you choose, you'll find roughly 10% of players worldwide getting bad network performance at any time, and around 60% of players get bad network performance every month.

Really?!

This is a lot to take in. The Internet is not an efficient routing machine, and it makes mistakes. Yes, everything is distributed and nobody is to blame, but the end result is clear: best-effort delivery makes no special effort to deliver your game packets with the lowest possible latency, jitter and packet loss.

Let's build up this understanding with an example, so you can see how easy it is for the Internet to make bad routing decisions without any ill intent.

Imagine you have three ISPs at your home:

- Fiber internet

- Cable internet

- Starlink

You want to use all your internet connections (you're paying for them, after all), so you set up multi-WAN, and your router distributes connections across all three ISPs using a hash of the destination IP address and port.

Then you play some multiplayer games and quickly notice that around 1 in 3 play sessions feel great, but the rest just aren't as good. What's going on? Simple. When you finish each match, you connect to a new server, so your destination IP address and port change, resulting in a different hash value. Your router uses this hash value modulo 3 to select an ISP, and your three ISPs don't have the same network performance!

In this case, it's an easy fix. You create a new router rule to map UDP traffic to your fiber internet connection, splitting the rest between cable internet and Starlink. Problem solved!
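The router behavior in this example can be sketched as follows. Real routers use their own (usually non-cryptographic) hash functions; SHA-256 here is only a deterministic stand-in, the ISP names mirror the example, and 203.0.113.10 is a documentation address.

```python
import hashlib
import ipaddress

ISPS = ["fiber", "cable", "starlink"]


def flow_hash(dst_ip: str, dst_port: int) -> int:
    """Deterministic stand-in for a router's hash of destination IP and port."""
    key = ipaddress.ip_address(dst_ip).packed + dst_port.to_bytes(2, "big")
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def select_isp_by_hash(dst_ip: str, dst_port: int) -> str:
    """Default multi-WAN behavior: hash modulo the number of ISPs."""
    return ISPS[flow_hash(dst_ip, dst_port) % len(ISPS)]


def select_isp_with_rule(protocol: str, dst_ip: str, dst_port: int) -> str:
    """The fix: pin UDP to fiber, hash everything else across cable and Starlink."""
    if protocol == "udp":
        return "fiber"
    others = ISPS[1:]
    return others[flow_hash(dst_ip, dst_port) % len(others)]


# Each new match means a new server address/port, so the hash may pick a new ISP.
for port in range(27015, 27020):
    print(port, select_isp_by_hash("203.0.113.10", port),
          select_isp_with_rule("udp", "203.0.113.10", port))
```

The hash is stable for a given flow, which keeps a connection on one ISP, but it has no idea which ISP is best for latency-sensitive traffic.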

Now step back even further. Imagine you're an ISP. You have multiple upstream transit provider links, and you have no way to know which one is really best for a given destination IP address. In fact, your incentive is perverse: get the packet off your network as quickly and cheaply as possible, so it doesn't cost you money.

Even worse, it's now basically impossible to detect which applications are games and which are throughput-oriented applications like large downloads. So even if you did know which upstream links were fastest for a particular destination, you wouldn't know which packets should be sent down them, and you can't just send all UDP traffic down the fastest link.

So you load balance. You take a hash and you modulo n.

Extend this to every hop in the traceroute between your client and server.
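Here is a toy model of that, with made-up link latencies: at each hop, a router hashes the flow's 5-tuple (with its own per-router seed) and picks one of several parallel links. Nothing in it reflects real networks; it only shows that the same client and server can land on very different end-to-end latencies depending on the source port a match happens to use.

```python
import hashlib

# Hypothetical path: at each hop, parallel links with different latencies (ms).
HOPS = [
    [2, 2],        # home -> ISP edge
    [5, 18],       # ISP -> transit provider A or B
    [12, 12, 35],  # transit -> exchange point
    [4, 9],        # exchange point -> datacenter
]


def hop_hash(hop_index, flow):
    """Each router hashes the 5-tuple with its own seed (here, the hop index)."""
    key = f"{hop_index}|{flow}".encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def path_latency(flow):
    """Sum the latency of the link chosen by hash modulo n at every hop."""
    return sum(links[hop_hash(i, flow) % len(links)] for i, links in enumerate(HOPS))


best = sum(min(links) for links in HOPS)   # 23 ms if every hop chose well
worst = sum(max(links) for links in HOPS)  # 64 ms if every hop chose badly

# Same client and server, different source port per match.
for src_port in range(50000, 50005):
    flow = ("udp", "198.51.100.7", src_port, "203.0.113.10", 27015)
    print(src_port, path_latency(flow), "ms (best possible", best, "ms)")
```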

And now you understand why the Internet has such strange behavior, where bad network performance moves around like weather and players get good network performance one match, and terrible the next.

Solutions

As game developers, we all want players to have the best experience: the lowest possible latency, jitter and packet loss and consistency from one match to the next.

And it's no surprise that bad network performance frustrates and churns players, so there's a financial incentive for us to solve this as well.

There's even an existence proof for a solution. In the early days of League of Legends, bad network performance was so severe that Riot built their own private Internet, Riot Direct, to fix it.

Today, Riot Direct accelerates network traffic for League of Legends and Valorant and helps protect against DDoS attacks, greatly improving the player experience. I think in this case the results clearly speak for themselves.

For everybody else who can't afford to build their own private Internet, there are a few things we can do:

- Packet tagging reduces jitter over Wi-Fi today. As ISPs adopt it over the next 5 years, it should fix network issues at the edge on ISP DOCSIS (cable) networks, but it won't fix everything end-to-end across IP. Make it user-toggleable in settings, because it doesn't play nicely with some old routers. Note that this is automatically supported on Xbox GDK if you use the official game port.

- L4S (5-10 years out) should help address congestion over IP networks. Mostly it does this by stopping TCP from completely filling up and congesting links with its sawtooth bandwidth usage for non-latency-sensitive traffic, but games can also use it to detect when they are sending too much bandwidth and overloading the link between client and server. Read more about L4S at CableLabs.com.

- Subvert the hash. Deploy relays in major cities between clients and servers (one hop) and ping the relays from your game client. Steer traffic through a relay when it significantly reduces latency vs. directly talking to the server. This simple step can subvert the hash and force game traffic to take the lowest latency route.

- Packet steering through multiple relays. Benefit from the diversity of many different networks. Set up relays from 10-20 different hosting companies in major cities and run a route optimization algorithm to find routes across multiple relays when they're significantly faster than the direct route. In other words, steer traffic through AWS or Google on the way to your bare metal server, or vice-versa, when it provides an improvement. Surprisingly effective.

- Multipath to reduce packet loss and jitter. Send game packets across several different routes at the same time using relays, such that each route is unlikely to have packet loss at the same time. Uses extra bandwidth but significantly reduces packet loss and jitter for your game. This approach is incredibly effective for fighting games because of their low bandwidth usage.
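The relay strategies above boil down to a route search over measured round-trip times. A minimal sketch, not Network Next's route optimizer, assuming that RTTs simply add along a route (ignoring relay processing time), that the client has pinged each relay, and that relay-to-relay and relay-to-server RTTs are known. The relay names, the numbers and the 10ms "significant improvement" threshold are hypothetical. Brute-force search is fine for a handful of relays; hundreds of relays would need a smarter search.

```python
from itertools import permutations


def route_rtt(path, client_to_relay, relay_to_relay, relay_to_server):
    """RTT of client -> path[0] -> ... -> path[-1] -> server, or None if a link is unknown."""
    if path[0] not in client_to_relay or path[-1] not in relay_to_server:
        return None
    total = client_to_relay[path[0]] + relay_to_server[path[-1]]
    for a, b in zip(path, path[1:]):
        hop = relay_to_relay.get((a, b), relay_to_relay.get((b, a)))
        if hop is None:
            return None
        total += hop
    return total


def best_route(direct_rtt, client_to_relay, relay_to_relay, relay_to_server,
               max_relays=2, min_improvement_ms=10.0):
    """Return (relays, rtt); an empty tuple means take the direct route."""
    best_path, best_rtt = (), direct_rtt
    for count in range(1, max_relays + 1):
        for path in permutations(client_to_relay, count):
            rtt = route_rtt(path, client_to_relay, relay_to_relay, relay_to_server)
            if rtt is not None and rtt < best_rtt:
                best_path, best_rtt = path, rtt
    # Only leave the direct route when the gain is significant.
    if direct_rtt - best_rtt < min_improvement_ms:
        return (), direct_rtt
    return best_path, best_rtt


client_to_relay = {"relay_sao": 8, "relay_mia_1": 70, "relay_mia_2": 65}
relay_to_server = {"relay_sao": 115, "relay_mia_1": 30, "relay_mia_2": 12}
relay_to_relay = {("relay_sao", "relay_mia_2"): 50, ("relay_mia_1", "relay_mia_2"): 2}

print(best_route(120, client_to_relay, relay_to_relay, relay_to_server))
# (('relay_sao', 'relay_mia_2'), 70)
```

Restricting the search to `max_relays=1` gives the single-relay "subvert the hash" case; allowing two relays lets traffic hop across different hosting companies, which in this made-up data beats the best single relay (77ms) and the direct route (120ms).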

Network Acceleration with Network Next

Network Next is my startup. We implement all of the strategies above to fix bad network performance for your multiplayer game. Not only do we see huge improvements around the world in regions like South America, Central America, Middle East and Asia Pacific, but also in the USA, Canada and Europe. Bad network performance is everywhere.

Network Next is available as an open source SDK in C/C++ or as a drop-in UE5 plugin that integrates with your game and takes over sending and receiving UDP packets.

When a better route is found than the default internet route (subject to hashing and modulo n), the SDK automatically steers your game packets across relays to fix it, including multipath if you enable it – to reduce latency, jitter and packet loss for your players.
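As a generic illustration of the multipath idea (not the Network Next SDK's implementation), each packet can be sent once per route while the receiver keeps only the first copy of each sequence number. Under the simplifying assumption that routes lose packets independently, a packet is lost only when every copy is lost, so two routes with 2% loss each give about 0.04% combined loss. The routes here are plain Python callables standing in for real sockets and relays, the duplicate window size is arbitrary, and sequence number wraparound is ignored.

```python
from collections import deque


class MultipathReceiver:
    """Accept the first copy of each sequence number and drop later duplicates."""

    def __init__(self, window=1024):
        self.window = window  # how many recent sequence numbers to remember
        self.seen = set()
        self.order = deque()

    def accept(self, sequence):
        if sequence in self.seen:
            return False
        self.seen.add(sequence)
        self.order.append(sequence)
        if len(self.order) > self.window:
            self.seen.discard(self.order.popleft())
        return True


def send_multipath(sequence, payload, routes):
    """Send the same packet once down every route."""
    for send in routes:
        send(sequence, payload)


def combined_loss(per_route_loss):
    """P(every copy is lost), assuming routes lose packets independently."""
    result = 1.0
    for loss in per_route_loss:
        result *= loss
    return result


# Simulated routes: route A drops packet 1, route B drops packet 2.
receiver = MultipathReceiver()
delivered = []


def make_route(dropped):
    def send(sequence, payload):
        if sequence not in dropped and receiver.accept(sequence):
            delivered.append(payload)
    return send


routes = [make_route({1}), make_route({2})]
for sequence in range(4):
    send_multipath(sequence, f"packet{sequence}", routes)
print(delivered)  # ['packet0', 'packet1', 'packet2', 'packet3']
print(f"{combined_loss([0.02, 0.02]):.2%}")  # 0.04%
```

The cost is bandwidth multiplied by the number of routes, which is why this pays off most for low-bandwidth games.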

The Network Next backend is implemented in Golang and runs in Google Cloud. It's load tested up to 25 million CCU and is extremely robust and mature. We've been doing this since 2017 and have accelerated more than 50 million unique players, including professional play for ESL Counter-Strike leagues (we are the technology behind ESEA FastPath).

We can run a Network Next backend and relay fleet for your game with white glove service for a small monthly fee, or for larger studios you can license the backend and get full source code and operate the whole system yourself.

Please contact us if you'd like to try out Network Next with your game.